Object Reconstruction with Depth Error Compensation Using Azure Kinect

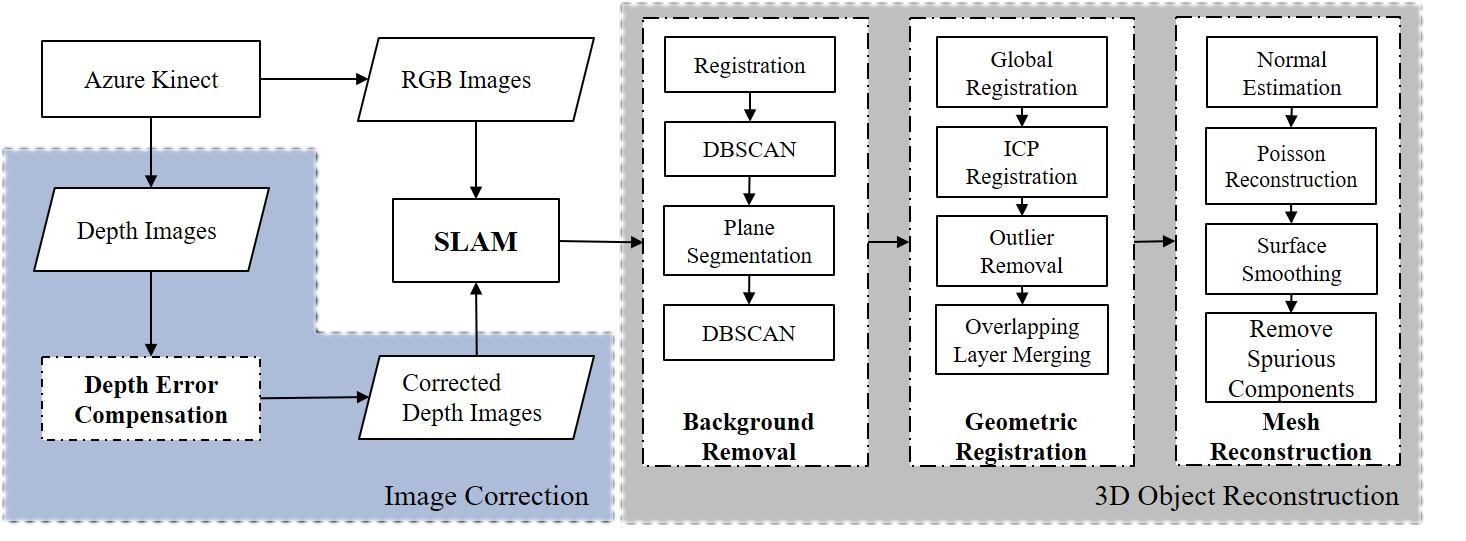

Framework of object reconstruction with depth error compensation using Azure Kinect

Abstract

Classical 3D reconstruction methods have an inherent limitation: they cannot separate the object of interest from the rest of the scene. Most methods also suffer from shape distortion caused by inaccurate depth measurement or estimation. In this paper, we present a method that automatically creates precise object meshes on top of existing SLAM frameworks (ORB-SLAM2 and BADSLAM), combined with a learning-based depth error compensation mechanism for Time-of-Flight cameras. We demonstrate the effectiveness of our method by reconstructing various objects with the Azure Kinect RGB-D camera. We also present experiments showing that our depth error compensation mechanism outperforms the classical depth correction method adopted by BADSLAM.

Paper: [PDF] Appendix: [PDF] Code: [GitHub]

Framework of depth error compensation
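The abstract describes a learning-based depth error compensation mechanism for Time-of-Flight depth but does not spell out the model's inputs. The sketch below illustrates the general idea under stated assumptions. It learns a mapping from per-pixel features to depth error (measured depth minus reference depth) and then subtracts the predicted error from each frame. A least-squares linear model stands in here for the neural network or random forest used in the paper. The feature set (normalised pixel coordinates, measured depth and its square) and the helper names `make_features`, `fit_error_model` and `compensate` are hypothetical. Depth is assumed to be in millimetres, as Azure Kinect depth images are, with 0 marking invalid pixels.

```python
import numpy as np


def make_features(depth):
    """Build a per-pixel feature matrix from an (H, W) depth image in millimetres.

    Hypothetical feature set: bias, normalised column u, normalised row v,
    depth in metres and its square. Returns (features, valid_mask), where
    pixels with zero depth are marked invalid.
    """
    h, w = depth.shape
    v, u = np.mgrid[0:h, 0:w]              # row and column index of every pixel
    d = depth.astype(np.float64).ravel()
    d_m = d / 1000.0
    feats = np.stack([
        np.ones_like(d),
        u.ravel() / w,
        v.ravel() / h,
        d_m,
        d_m ** 2,
    ], axis=1)
    return feats, d > 0


def fit_error_model(measured_frames, gt_depth):
    """Least-squares fit of depth error (measured - reference) on the features."""
    gt = gt_depth.astype(np.float64).ravel()
    X_list, y_list = [], []
    for frame in measured_frames:
        feats, valid = make_features(frame)
        err = frame.astype(np.float64).ravel() - gt
        valid &= gt > 0                    # also skip pixels without a reference
        X_list.append(feats[valid])
        y_list.append(err[valid])
    X = np.concatenate(X_list)
    y = np.concatenate(y_list)
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def compensate(depth, coef):
    """Subtract the predicted error from valid pixels; invalid pixels stay 0."""
    feats, valid = make_features(depth)
    corrected = depth.astype(np.float64).ravel() - feats @ coef
    corrected[~valid] = 0.0
    return corrected.reshape(depth.shape)
```

Applied per frame, `compensate` returns a corrected depth image in the same units as its input. Replacing the linear model with a random forest or a small neural network would change only the fitting and prediction steps. The feature extraction and the subtraction of the predicted error would stay the same.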

Experiment Results

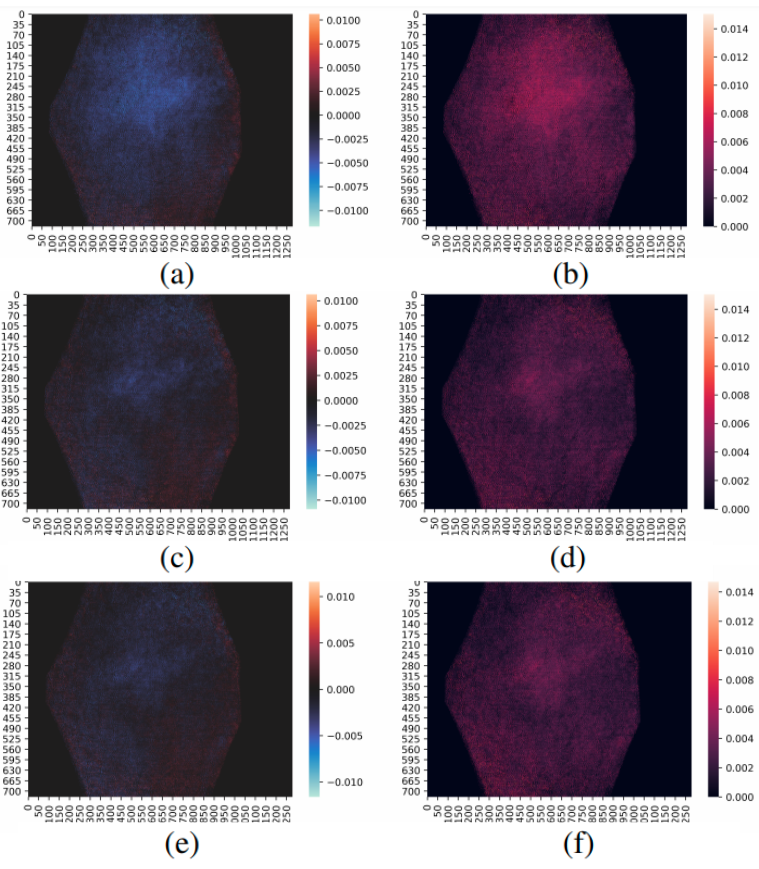

Left: 3D surface reconstruction of a person (left) and a face (right). The top row shows the input point clouds, the middle row shows the meshes reconstructed using Poisson Surface Reconstruction, and the bottom row shows the meshes after cropping the redundant parts. Right: mean (left column) and standard deviation (right column) of the depth error at each pixel over 100 images: original images (a)-(b), images corrected with the neural network (c)-(d), and images corrected with the random forest (e)-(f).
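The right-hand panels report the per-pixel mean and standard deviation of the depth error over 100 images. A minimal way to compute such maps is sketched below. It assumes a stack of depth frames of a static scene and a reference depth image of the same size. How the reference is obtained is not stated here, and the function name `per_pixel_error_stats` is hypothetical. Pixels with zero depth, in either a frame or the reference, are excluded from the statistics at that pixel.

```python
import numpy as np


def per_pixel_error_stats(frames, gt_depth):
    """Per-pixel mean and standard deviation of depth error over a stack of frames.

    frames:   array-like of shape (N, H, W), e.g. N = 100 captures of a static scene.
    gt_depth: (H, W) reference depth in the same units as the frames.
    Invalid (zero) depth values are ignored; a pixel that is never valid
    yields NaN (NumPy emits a RuntimeWarning in that case).
    """
    stack = np.asarray(frames, dtype=np.float64)
    gt = np.asarray(gt_depth, dtype=np.float64)[None, :, :]
    err = stack - gt                           # signed error: measured - reference
    valid = (stack > 0) & (gt > 0)
    err = np.where(valid, err, np.nan)         # mask invalid samples as NaN
    mean = np.nanmean(err, axis=0)
    std = np.nanstd(err, axis=0)               # population std (ddof=0)
    return mean, std
```

`np.nanstd` uses the population standard deviation (`ddof=0`) by default. Pass `ddof=1` to get the sample estimate instead. Running the function once on the original frames and once on the corrected frames gives the kind of side-by-side comparison shown in panels (a)-(f).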